Sometimes, when it comes to using artificial intelligence in journalism, people think of a calculator, an accepted tool that makes work faster and easier.

Sometimes, they think it’s flat-out cheating, passing off the work of a robot for a human journalist.

Sometimes, they don’t know what to think at all — and it makes them anxious.

All of those attitudes emerged from new focus group research from the University of Minnesota commissioned by the Poynter Institute about news consumers’ attitudes toward AI in journalism.

The research, conducted by Benjamin Toff, director of the Minnesota Journalism Center and associate professor at Minnesota’s Hubbard School of Journalism & Mass Communication, was unveiled to participants at Poynter’s Summit on AI, Ethics and Journalism on June 11. The summit brought together dozens of journalists and technologists to discuss the ethical implications for journalists using AI tools in their work.

“I think it’s a good reminder of not getting too far ahead of the public,” Toff said, in terms of key takeaways for newsrooms. “However much there might be usefulness around using these tools … you need to be able to communicate about it in ways that are not going to be alienating to large segments of the public who are really concerned about what these developments will mean for society at large.”

The focus groups, conducted in late May, involved 26 average news consumers, some of whom knew a fair amount about AI’s use in journalism and some of whom knew little.

Toff discussed three key findings from the focus groups:

- A background context of anxiety and annoyance: People are often anxious about AI — whether it’s concern about the unknown, that it will affect their own jobs or industries, or that it will make it harder to identify trustworthy news. They are also annoyed about the explosion of AI offerings they are seeing in the media they consume.

- Desire for disclosure: News consumers are clear they want disclosure from journalists about how they are using AI — but there is less consensus on what that disclosure should be, when it should be used and whether it can sometimes be too much.

- Increasing isolation: They fear that increased use of AI in journalism will worsen social isolation and will hurt the humans who produce today’s news coverage.

Anxious and annoyed

While some participants saw AI as part of an age-old cycle where a disruptive technology changes an industry, or a society, others had bigger concerns.

“Because I’ve done some computer security work, I do believe in the intrinsic evil of people to do bad things, using any tool they can lay their hands on, including AI,” said a participant named Stewart. “And that’s what I think we’ve got to protect ourselves against, you know?”

Others felt besieged by AI offerings online.

“I’ve noticed it more on social media, like it’s there. ‘Do you want to use this AI function?’ and it’s right there. And it wasn’t there that long ago. … It’s almost like, no, I don’t want to use it! So it’s kind of forced on you,” said a participant named Sheila.

Most participants already distrusted the news media and felt the introduction of AI could make things worse.

All of that was a concern for Alex Mahadevan, director of Poynter’s MediaWise media literacy program, who helped organize the summit and the research.

“Are we going to make a mistake and start rolling out all the AI things we’re doing, and instead of sparking wonder in our audiences, we’re going to annoy them?” he asked. Mahadevan is a proponent of using AI ethically to improve the ways journalists serve their audience, such as finding new ways to personalize news or creating Instagram Reels to accompany more stories than a human team alone could cover.

Is it cheating?

A notable finding of the focus groups was that many participants felt certain AI use in creating journalism — especially when it came to using large language models to write content — seemed like cheating.

“I think it’s interesting if they’re trying to pass this off as a writer, and it’s not. So then I honestly feel deceived. Because yeah, it’s not having somebody physically even proofing it,” said focus group member Kelly.

Most participants said they wanted to know when AI was used in news reports — and disclosure is a part of many newsroom AI ethics policies.

But some said it didn’t matter for simple, “low stakes” content. Others said they wanted extensive citations, like “a scholarly paper,” whether or not they engaged with them. Still others worried about “labeling fatigue”: so much disclosure about the sources of their news that they might not have time to digest it all.

“People really felt strongly about the need for it, and wanting to avoid being deceived,” said Toff, a former journalist whose academic research has often focused on news audiences and the public’s relationship with news. “But at the same time, there was not a lot of consensus around how much or precisely what the disclosure should look like.”

Previous research by Toff and Felix Simon of the Reuters Institute revealed a paradox: audiences wanted news organizations to disclose their use of AI, but then viewed those organizations more negatively once they knew AI had been used.

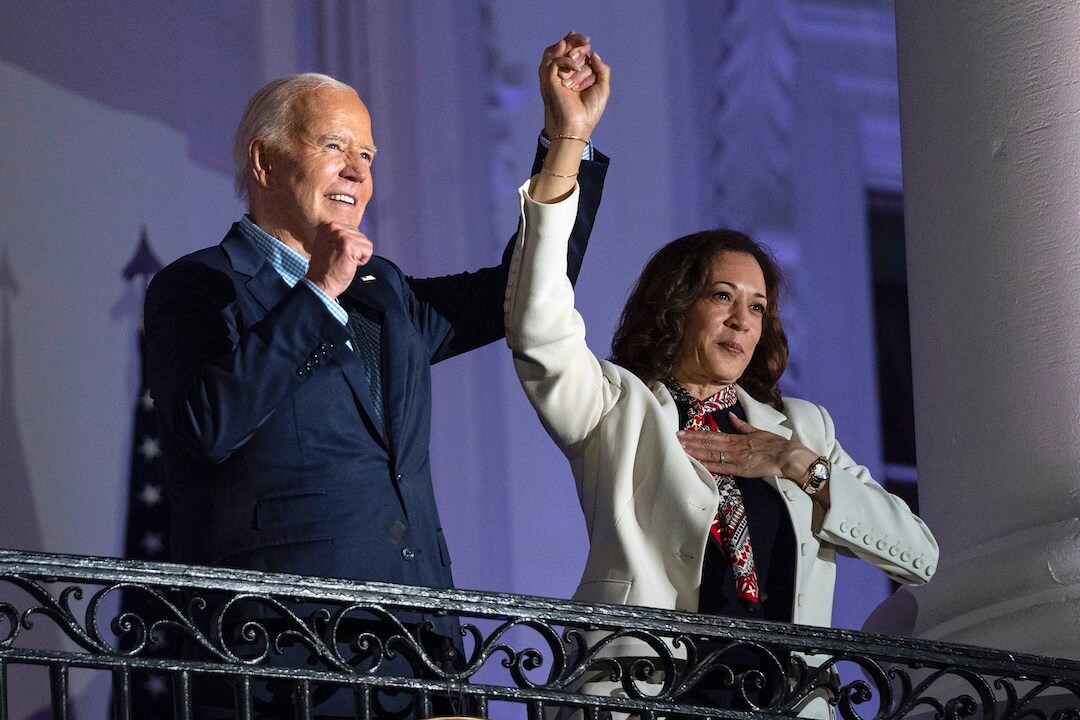

Benjamin Toff of the University of Minnesota (right) speaks at Poynter’s Summit on AI, Ethics and Journalism, on a panel with (l-r) Alex Mahadevan of MediaWise, Joy Mayer of Trusting News and Phoebe Connelly of The Washington Post. Credit: Alex Smyntyna/Poynter

Some of the focus group participants made a similar point, Toff said. “They didn’t actually believe (newsrooms) would be disclosing, however much they had editorial guidelines insisting they do. They didn’t believe there would be any internal procedures to enforce that.”

How journalists tell their audiences what they are doing with AI will be vitally important, said Kelly McBride, Poynter’s senior vice president and chair of the Craig Newmark Center for Ethics and Leadership. And they probably shouldn’t even use the term AI, she said, but rather more precise descriptions of which program or technology they used and for what purpose.

For example, she said, a newsroom could explain that it used an AI tool to examine thousands of satellite images of a city or region and flag what had changed over time, so its journalists could do further reporting.

“There’s just no doubt in my mind that over the next five to 10 years, AI is going to dramatically change how we do journalism and how we deliver journalism to the audience,” McBride said. “And if we don’t … educate the audience, then for sure they are going to be very suspicious and not trust things they should trust. And possibly trust things they shouldn’t trust.”

The human factor

A number of participants expressed concern that a growing use of AI would lead to the loss of jobs for human journalists. And many were unnerved by the example Toff’s team showed them of an AI-generated anchor reading the news.

“I would encourage (news organizations) to think of how they can use this as a tool to take better care of the human employees that they have. So, whether it’s to, you know, use this as a tool to actually give their human employees … the chance to do something they’re not getting enough time to do … or to grow in new and different ways,” said participant Lyle, who added that he could see management “using this tool to find ways to replace or get rid of the human employees that they have.”

“If everybody is using AI, then all the news sounds the same,” said participant Debra.

McBride agreed.

“The internet and social media and AI all drive things toward the middle — which can be a really mediocre place to be,” she said. “I think about this with writing a lot. There is a lot of just uninspired, boring writing out there on the internet, and I haven’t seen anything created by AI that I would consider to be a joy to read or absolutely compelling.”

Mahadevan also said it is difficult for many people to get past the “uncanny valley” effect of AI avatars and voices; they appear human, but consumers know something is off.

“It still feels very strange to me to hear an AI woman reading a New York Times article. She makes mistakes in the intonation of her voice and the way she speaks,” he said. “I can get people’s hesitation because it feels very alienating to have a robot talking to you.”

Said focus group member Tony: “That’s my main concern globally about what we’re talking about. The human element. Hopefully, that isn’t taken over by artificial intelligence, or it becomes so powerful that it doesn’t do a lot of these tasks, human tasks, you know? I think a lot of things have to remain human, whether it be error or perfection. The human element has to remain.”

Helping newsrooms move forward

Poynter has been focusing on the ethical issues for journalists using AI in their work. In March, Poynter published a “starter kit” framework, written by McBride, Mahadevan and faculty member Tony Elkins, for newsrooms that need to create an ethics policy around their AI use. After the recent AI summit, Poynter experts will be updating and adding to that framework.

Those changes are likely to include addressing data privacy, which was a theme at the summit, though it came up less often in the focus groups.

“AI is likely to create an exchange of information between the news provider and the news consumer in ways that allow the news to be personalized,” McBride said. “But we’re going to have to be very clear in the way we’re going to guard people’s privacy and not abuse that data.”

Mahadevan said he would like to spend more time helping newsrooms address disclosure.

“I think what I’d like to work on is a more universal disclosure based on some of the feedback we got from the audience — some more guidance on how to craft a non-fatigue-inducing disclosure.”

Toff is still analyzing the focus group results, but the consumers’ attitudes may already hold important insights for the future of financially struggling news organizations.

As AI advances, it seems highly likely to deliver news and information directly to consumers while weakening their connection to the news organizations that produced the information in the first place.

“People did talk about some of the ways they could see these tools making it easier to keep up with news, but that meant keeping up with the news in ways they already were aware they weren’t paying attention to who reported what,” Toff said.

Still, in a somewhat hopeful sign for journalists, several focus group members stressed the important human role in producing good journalism.

“A number of people raised questions about the limitations of these technologies and whether there were aspects of journalism that you really shouldn’t replace with a machine,” Toff said. “Connecting the dots and uncovering information — there’s a recognition there’s a real need for on-the-ground human reporters in ways there is a lot of skepticism these tools could ever produce.”